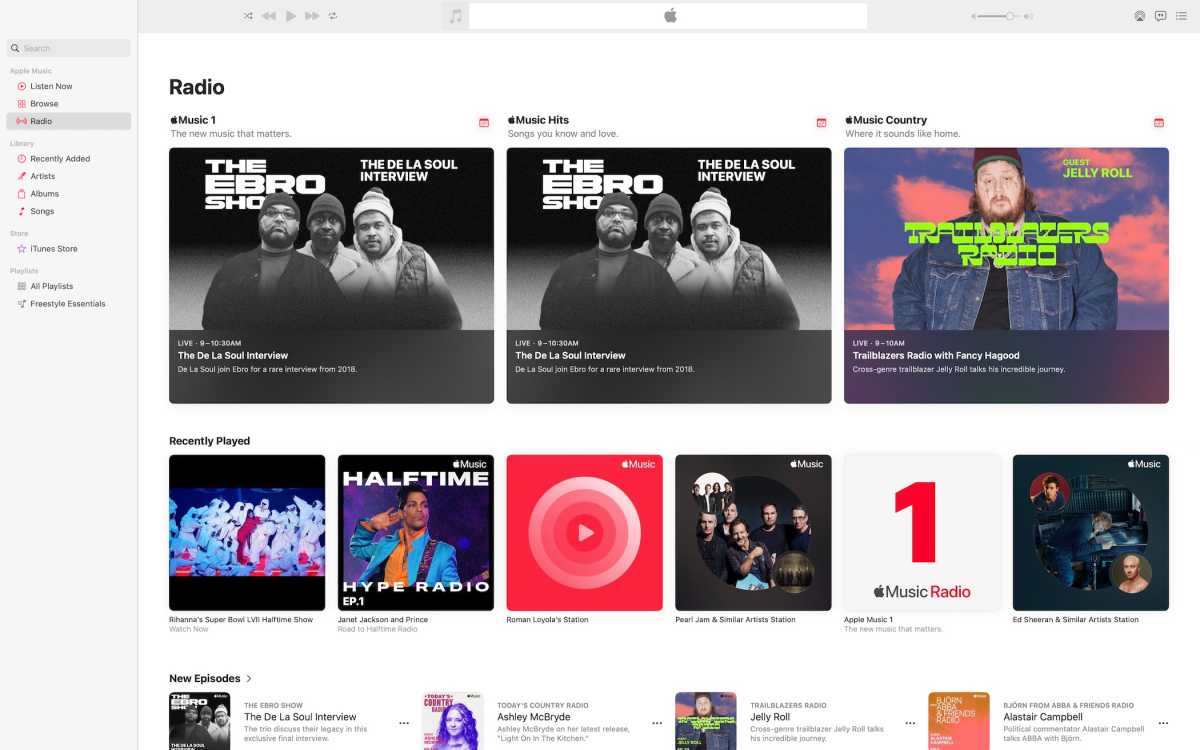

Over the last two decades, technology has reinvented much of what we do in our everyday lives, but the first major domino to fall was probably the advent of digital music. Next month will mark twenty years since the launch of Apple’s iTunes Store (fun fact: a birthday shared with yours truly), which, though not the first way to obtain digital music online, was certainly the most far-reaching.

The digital music experience has certainly changed in the intervening years, especially with the rise of streaming over the past decade, but when it comes to Apple’s take on the act of listening to music, well, there are some things that frankly haven’t changed enough. It sometimes feels like Apple believes that digital music is a solved problem, with the company sitting back and dusting off its hands, but there are definitely places where the music listening experience could be improved.

Not so gently down the stream

In 2019, Apple split off its venerable iTunes app on the Mac into three separate apps: Music, TV, and Podcasts. While some look back at the iTunes era with nostalgia, I’m not going to sugarcoat it: iTunes had become a hot mess. In theory, splitting these off into disparate apps related to specific types of media was a good idea: people don’t want to watch TV shows or listen to podcasts in the same way that they listen to music.

In the implementation, however, the macOS Music app is basically the former iTunes app with Apple Music’s streaming functionality bolted on. While being able to include both tracks from your personal library and Apple Music in one unified interface has its benefits, especially when it comes to ease of use, it can sometimes feel like Apple’s performing some clever legerdemain. For example, one of my biggest frustrations is discovering that a specific track from an album that I’ve added to my library is unavailable because of streaming rights. Why just a specific track? It’s almost always unclear–but it does put paid to the idea that music in your library is actually in your library.

That’s just one example of where this melding doesn’t always work; there are plenty of others, including matching an explicit version of a song to a clean version (or vice versa), ending up with split albums because of metadata problems, and just plain getting the wrong version of a song (live instead of studio, for example). Critics of the Music app will no doubt have many other points to add on here, and this could easily devolve into a piece entirely on its shortcomings, but let’s talk about a couple of other glaring issues.

Look ma, no handoff!

I’m going to shamelessly crib this one from my friend and colleague Joe Rosensteel, who recently penned an excellent article on the many problems with the Music app: why hasn’t Apple implemented Handoff for music?

If you’ve forgotten what Handoff is, it’s one of Apple’s Continuity features (a catch-all name for an increasingly expansive suite of functionality), which are supposed to make it easy to move a task between your Apple devices. If you’ve ever started writing an email on your iPad and then turned to your Mac and seen a second Mail icon in the Dock, that’s Handoff. (Frankly, sometimes I can’t get it to go away, especially with apps from my Apple Watch.)

But no similar functionality exists for music. If I pause a song I’m playing on my Mac and want to pick it up on my iPhone (an analog of which was performed in the very first ad for the iPod in 2001), I have to launch the Music app on my phone, find the track, and skip ahead to where it was on the Mac. Apple’s Podcasts app gets this right: it syncs the playhead position across devices. Music should do the same, or at least let users opt into that syncing. The closest Apple has gotten is letting you transfer music from a phone to a HomePod by bringing them close together.

This leads us to another big issue.

The AirPlay’s not the thing

AirPlay is a mess. It’s not even a hot mess; it’s just a mess. A few years ago, around the time that Apple launched the first HomePod, the company changed the way AirPlay worked–sort of. Formerly, all AirPlay speakers were treated in much the same way: as basically an external speaker for music playing from your device, whether it was a Mac, iPhone, iPad, etc. It behaved more or less as though you switched from listening on headphones to listening on a built-in speaker.

However, when the HomePods launched, with their ability to play music themselves without another device, Apple decided to treat them differently in AirPlay. Now, when you start playing music on your device and then AirPlay it to a HomePod, the currently playing track shifts to the HomePod’s own internal playlist, as though you’ve used Siri to tell the speaker to play a song.

This has caused me no end of frustration, especially when I start listening to an album on my phone, AirPlay it to my HomePod mini, and then go back to my phone only to discover that it’s still on the same track it was on when I first AirPlayed it.

Now, instead of AirPlaying, you can control playback directly on a HomePod by using the AirPlay menu on an iOS device and scrolling all the way down to Control Other Speakers & TVs…but then you’re essentially kicked into a version of the Music app that looks exactly the same as the Music app, but doesn’t let you do all the same things. (I, for example, can never start playing a different song on the HomePod using this interface.)

Meanwhile, all non-HomePod smart speakers are still treated the old way, as external speakers. Getting this right is, of course, complicated, but whatever the answer is, it isn’t this. Here’s hoping iOS 17 brings some much-needed revisions to the way AirPlay works (or, more accurately, doesn’t).
